Looking for a humble AI

Over the weekend, I watched the new Netflix documentary “The Social Dilemma”. The (highly recommended) movie describes how social media and tech companies (Google, Facebook, Twitter, Pinterest, etc.) mine our attention in order to predict our actions and sell ads based on those predictions. Most of the people in the documentary are former employees - engineers, data scientists, execs. They all set out to build products which were not supposed to be evil, but ended up with unintended consequences - growing political polarization, body image issues among teens and, of course, pure and simple addiction to the infinite scroll. At one point, one of the engineers commented - “You, as a human with hardware which hasn’t changed in millions of years, are competing against an AI with exponentially growing computing power - you don’t stand a chance” (I’m paraphrasing wildly here). This movie got me thinking a lot about the humility required of us when building and using AI systems.

A few months ago, I joined Anodot, a company which at its heart is an AI company. Co-founded by Dr. Ira Cohen, a leading data scientist and one of the smartest (and nicest!) people I’ve worked with, the company has numerous patents around machine learning algorithms for anomaly detection, business monitoring and prediction. Working with Ira’s team has certainly not made me a data scientist by any means, but I’ve learned from him how easy it is to assume that AI/ML* can answer questions which it cannot. Ira and his team have the humility that comes from deeply understanding that AI is a tool which can only do so much. As I see more and more AI implementations around me, I also see people lose this humility and simply entrust their judgement to the algorithm.

Here are a couple of examples from recent weeks. Grading students’ exams by AI, which turned out to be easily hackable, is amusing. But this one is really concerning - the Pasco County sheriff’s department created an algorithm to predict which residents are likely to commit a crime. Officers went to the homes of people singled out by the algorithm, charged them with zoning violations, and made arrests for any reason they could. Those charges were then fed back into the algorithm, creating a vicious cycle which is just scary.

Those of us who have spent some time in the HR space know this problem. You set a KPI / MBO for your employees and make their bonus dependent on it - and their sole purpose will now be to meet that KPI, regardless of whether it’s for the good of the company or not. If you tell a product manager their bonus is a function of how many customer meetings they conduct - you can bet they will hit that number. Will they derive meaningful input from those meetings? Will they even listen? No way to know. ML is the same, only worse - you define a target function for the things it should ‘decide’ or ‘predict’, and it will fine-tune itself to meet exactly the KPI you defined, with no moral qualms or view of the bigger picture.
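To make the KPI point a bit more concrete, here is a minimal Python sketch. Everything in it is invented for illustration: the 40-hour budget, the `insight_per_meeting` curve and the function names are my own assumptions, not a model of any real team or product. It only shows that an optimizer rewarded on the meeting count picks a very different plan from one rewarded on what the meetings are actually for.

```python
# Toy illustration only: every number and formula here is an assumption.
HOURS = 40.0  # hypothetical time budget a product manager has for customer meetings


def insight_per_meeting(minutes: float) -> float:
    """Assumed curve: meetings under 30 minutes teach nothing,
    longer ones help with diminishing returns."""
    return 0.0 if minutes < 30 else (minutes - 30) ** 0.5


def kpi(n_meetings: int) -> int:
    """The target we actually reward: the number of meetings held."""
    return n_meetings


def real_value(n_meetings: int) -> float:
    """What we really wanted: total insight gathered within the time budget."""
    minutes_each = HOURS * 60 / n_meetings
    return n_meetings * insight_per_meeting(minutes_each)


# Candidate plans: from one 40-hour meeting up to 240 ten-minute meetings.
candidates = range(1, 241)

best_for_kpi = max(candidates, key=kpi)           # what the optimizer is told to do
best_for_value = max(candidates, key=real_value)  # what we hoped it would do

print("Plan that maximizes the KPI:", best_for_kpi, "meetings,",
      "real value =", real_value(best_for_kpi))
print("Plan that maximizes real value:", best_for_value, "meetings,",
      "real value =", round(real_value(best_for_value), 1))
```

In this toy setup the KPI-maximizing plan crams in as many ten-minute meetings as possible and learns nothing from them, while the plan that maximizes the assumed real value holds far fewer, longer meetings. The optimizer is not being malicious, it is doing exactly what the target function asked for.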

Yuval Noah Harari warns us that AI is as threatening as climate change and nuclear war. I’m not sure I share his level of concern (though he probably has a better perspective on things than I do). I do know that ML is changing our world and our ability to process vast amounts of data. For us as product managers, execs and engineers, that does not remove the need for some humility. You really don’t want to be in that next documentary saying “We never intended”.

* Throughout this text I use “ML” and “AI” rather loosely. This blog post gives a good explanation of the difference between the two - https://www.anodot.com/blog/what-is-ai-ml/

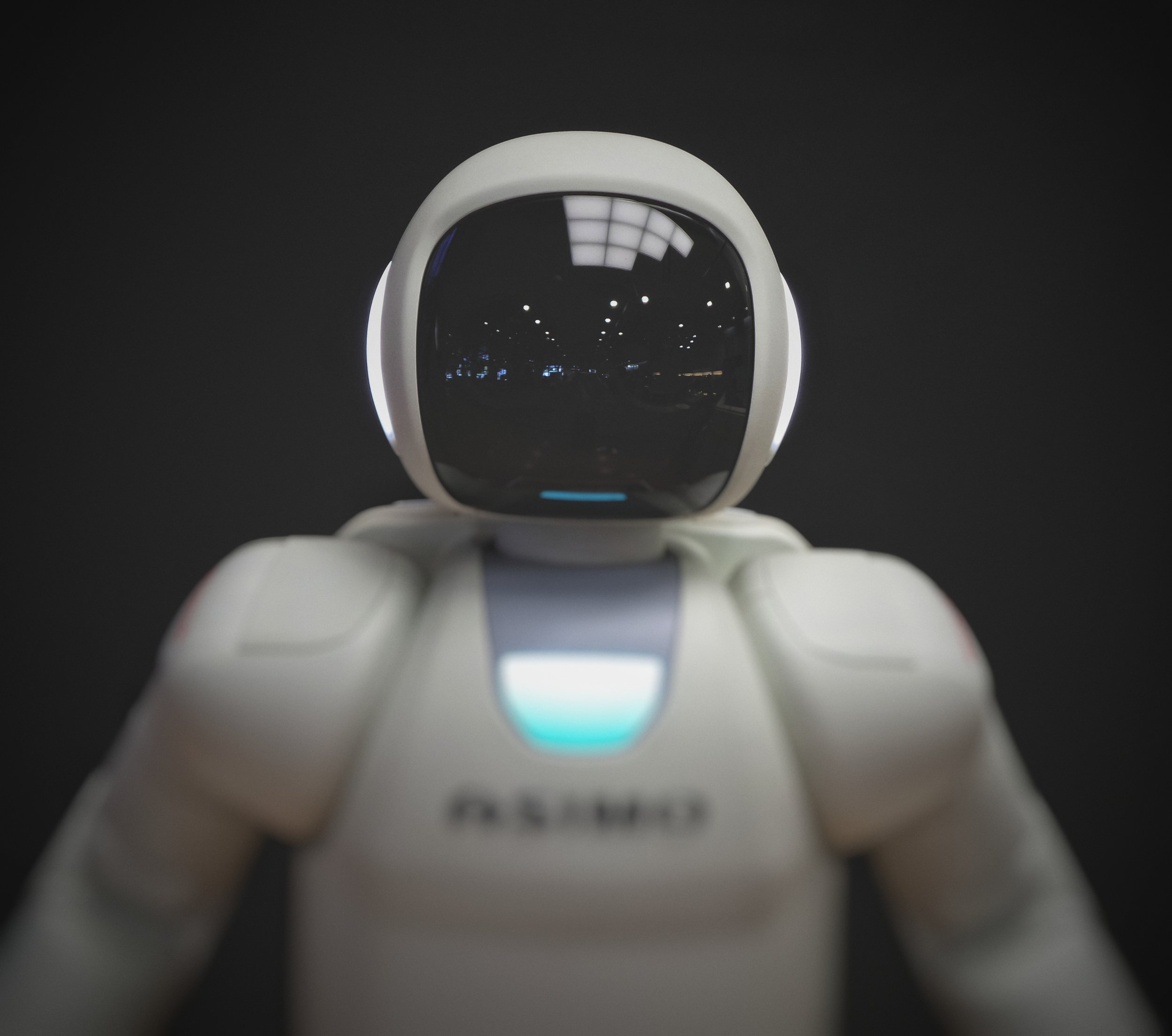

* Robot image courtesy of Photos Hobby on Unsplash